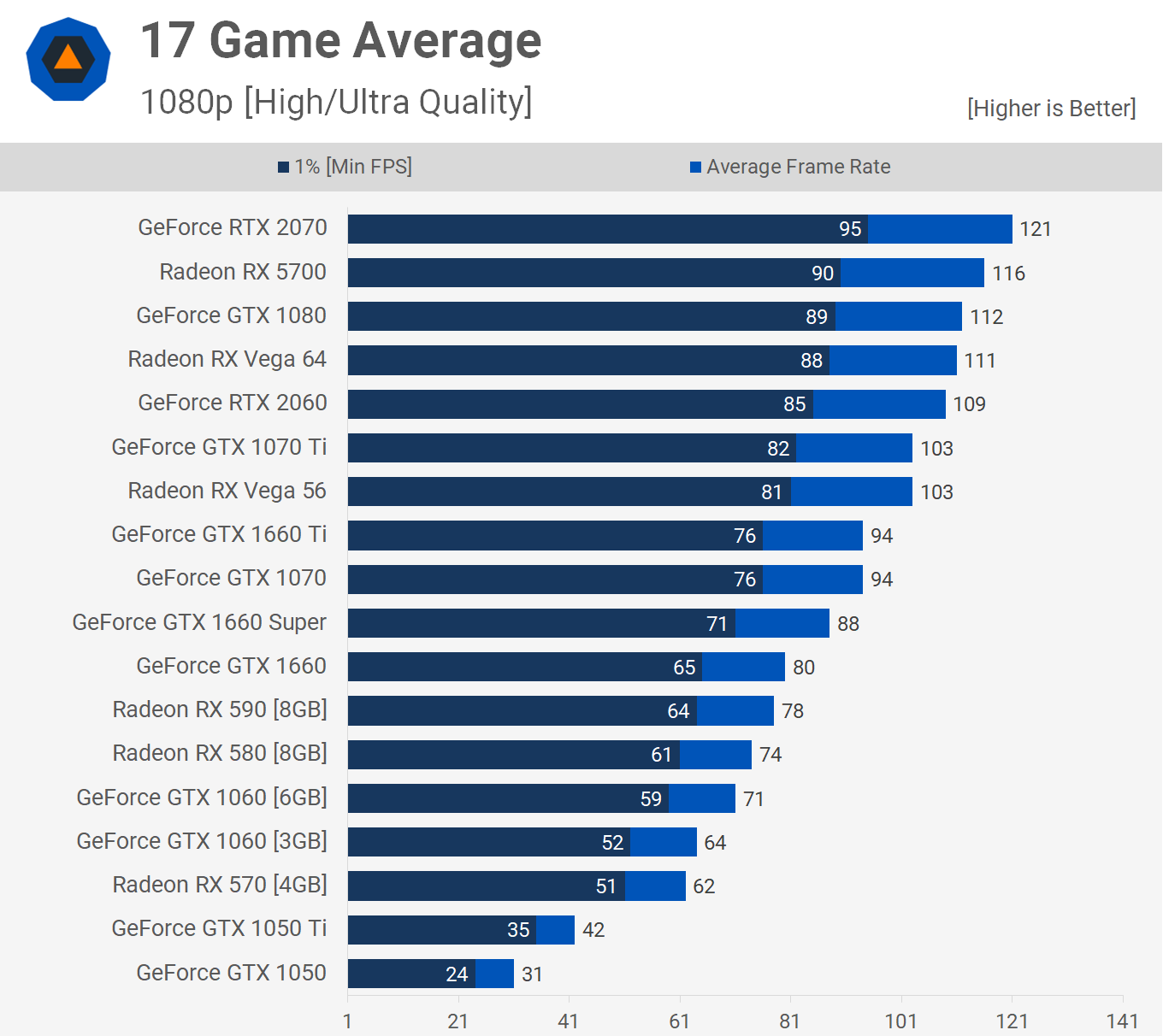

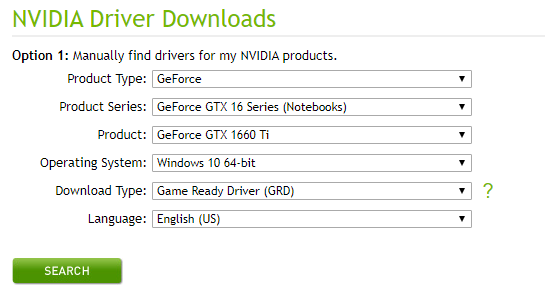

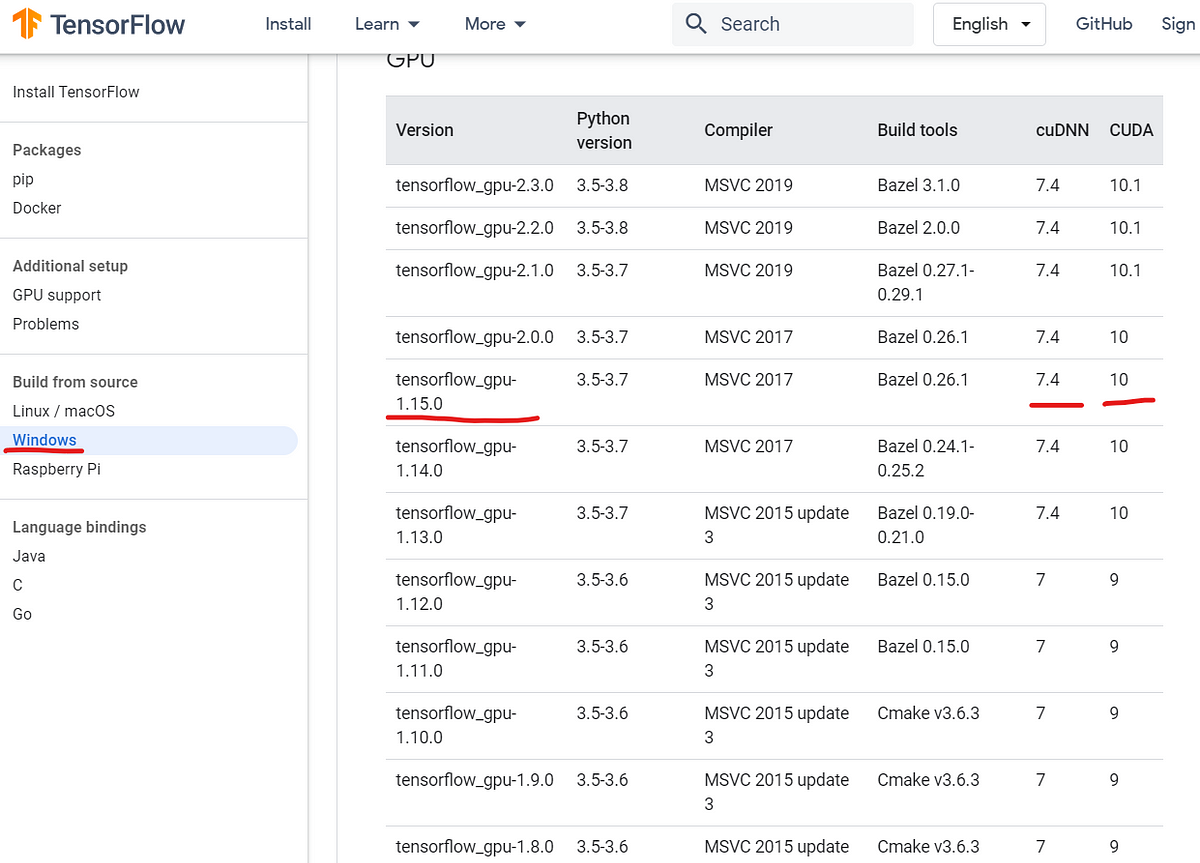

Install Tensorflow-GPU 2.0 with CUDA v10.0, cuDNN v7.6.5 for CUDA 10.0 on Windows 10 with NVIDIA Geforce GTX 1660 Ti. | by Suryatej MSKP | Medium

Asus GeForce GTX 1660 Super Phoenix Fan OC Edition 6GB HDMI DP DVI Graphics Card : Amazon.sg: Electronics

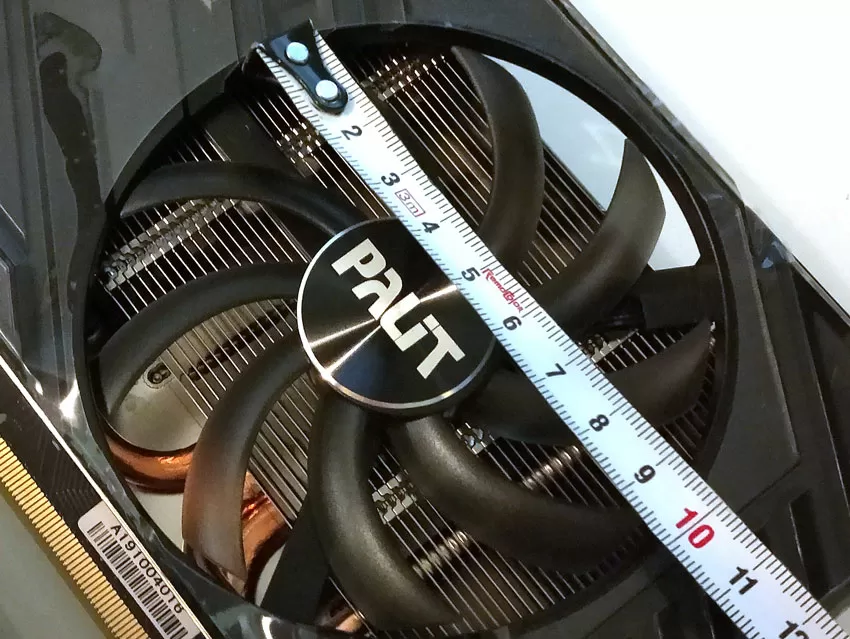

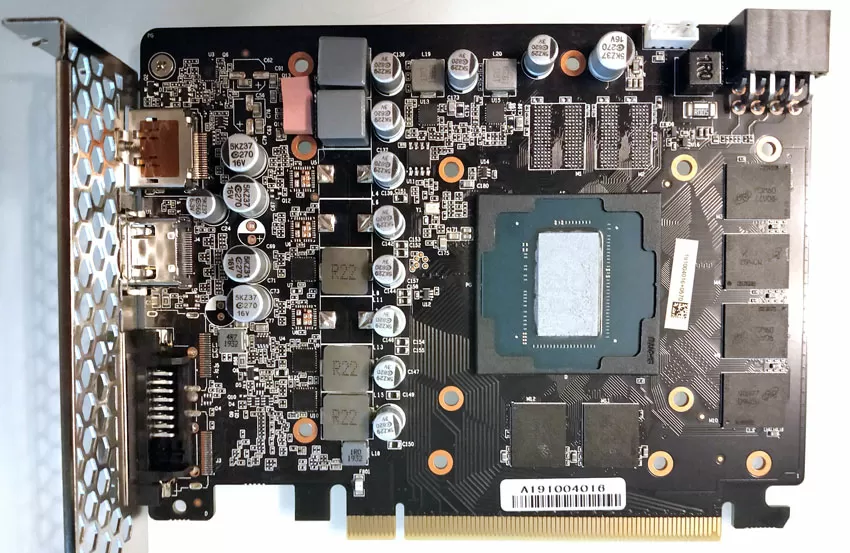

Palit GeForce GTX 1660 Super review: testing a novelty in computing and machine learning | hwp24.com

Installing TensorFlow, CUDA, cuDNN for NVIDIA GeForce GTX 1650 Ti on Window 10 | by Yan Ding | Analytics Vidhya | Medium

Installing TensorFlow, CUDA, cuDNN with Anaconda for GeForce GTX 1050 Ti | by Shaikh Muhammad | Medium

Windows 11 and CUDA acceleration for Starxterminator - Page 4 - Experienced Deep Sky Imaging - Cloudy Nights

Palit GeForce GTX 1660 Super review: testing a novelty in computing and machine learning | hwp24.com